Page 25 - EETimes Europe June 2021


Tools Move Up the Value Chain to Take the Mystery Out of Vision AI

The Kria SOMs also enable customization and optimization for embedded developers with support for standard Yocto-based PetaLinux.

Xilinx said a collaboration with Canonical is also in progress to provide support for Ubuntu Linux, the highly popular Linux distribution used by AI developers. This offers widespread familiarity with AI developers and interoperability with existing applications.

Customers can develop in either environment and take either approach to production. Both environments will come pre-built with a software infrastructure and helpful utilities.

We’ve highlighted three of the approaches that vendors are taking to address the skills and knowledge gap, as well as the deployment time, for embedded-vision systems development. The cloud-based approaches offer tools that “democratize” the ability to create and train models and evaluate hardware for extremely rapid deployment onto embedded devices. And the approach that offers a module, or reference design, with an app library allows AI developers to use existing tools to create embedded-vision systems quickly.

These are all moving us to a different way of looking at development boards and tools. They take the mystery out of embedded vision by moving up the value chain, leaving the foundational-level work to the vendors’ tools and modules. ■

Nitin Dahad is editor-in-chief of Embedded.

SPECIAL REPORT: EMBEDDED VISION

Synaptics Makes a Natural Progression to Edge AI

By Gina Roos

At one time, Synaptics Inc. was best known for its interface products, including fingerprint sensors, touchpads, and display drivers for PCs and mobile phones. Today, propelled by several acquisitions over the past several years, the company is making a big push into consumer IoT as well as computer-vision and artificial-intelligence solutions at the edge.

Synaptics sees opportunities in computer vision across all markets and recently launched edge-AI processors that target real-time computer-vision and multimedia applications.

The company’s recent AI roadmap

spans from enhancing the image quality of

high-resolution cameras using the high-

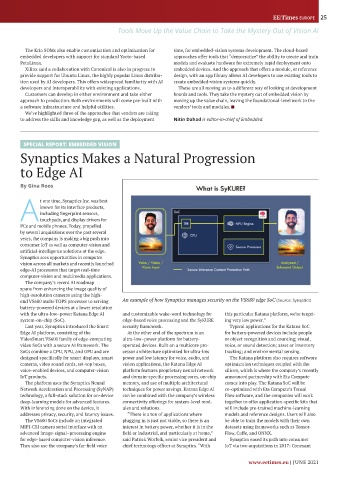

end VS680 multi-TOPS processor to serving An example of how Synaptics manages security on the VS680 edge SoC (Source: Synaptics)

battery-powered devices at a lower resolution

with the ultra-low–power Katana Edge AI and customizable wake-word technology for this particular Katana platform, we’re target-

system-on-chip (SoC). edge-based voice processing and the SyKURE ing very low power.”

Last year, Synaptics introduced the Smart security framework. Typical applications for the Katana SoC

Edge AI platform, consisting of the At the other end of the spectrum is an for battery-powered devices include people

VideoSmart VS600 family of edge-computing ultra-low–power platform for battery- or object recognition and counting; visual,

video SoCs with a secure AI framework. The operated devices. Built on a multicore pro- voice, or sound detection; asset or inventory

SoCs combine a CPU, NPU, and GPU and are cessor architecture optimized for ultra-low tracking; and environmental sensing.

designed specifically for smart displays, smart power and low latency for voice, audio, and The Katana platform also requires software

cameras, video sound cards, set-top boxes, vision applications, the Katana Edge AI optimization techniques coupled with the

voice-enabled devices, and computer-vision platform features proprietary neural network silicon, which is where the company’s recently

IoT products. and domain-specific processing cores, on-chip announced partnership with Eta Compute

The platform uses the Synaptics Neural memory, and use of multiple architectural comes into play. The Katana SoC will be

Network Acceleration and Processing (SyNAP) techniques for power savings. Katana Edge AI co-optimized with Eta Compute’s Tensai

technology, a full-stack solution for on-device can be combined with the company’s wireless Flow software, and the companies will work

deep-learning models for advanced features. connectivity offerings for system-level mod- together to offer application-specific kits that

With inferencing done on the device, it ules and solutions. will include pre-trained machine-learning

addresses privacy, security, and latency issues. “There is a ton of applications where models and reference designs. Users will also

The VS600 SoCs include an integrated plugging in is just not viable, so there is an be able to train the models with their own

MIPI-CSI camera serial interface with an interest in battery power, whether it is in the datasets using frameworks such as Tensor-

advanced image-signal–processing engine field or industrial, and particularly at home,” Flow, Caffe, and ONNX.

for edge-based computer-vision inference. said Patrick Worfolk, senior vice president and Synaptics eased its path into consumer

They also use the company’s far-field voice chief technology officer at Synaptics. “With IoT via two acquisitions in 2017: Conexant
