Page 22 - EE Times Europe Magazine – November 2023

OPINION | GREENER ELECTRONICS | PROCESSING

The Mind-Boggling

Cost of Generative AI MEMORY WALL’S IMPLICATIONS FOR

GENERATIVE AI

Moving terabytes of data at high speed

Ownership between memory and computing elements

requires data-transfer bandwidths of

terabytes/second, which is hardly practica-

ble. If the processor does not receive data on

By Lauro Rizzatti, Vsora time, it sits idle, decreasing its efficiency. As

recently reported, the efficiency of running

ChatGPT-4 on leading-edge hardware deep

Advancements in large language models (LLMs)—software dives to 3% or less. A generative AI (GenAI)

algorithms driven by transformers—have not been met with similar accelerator with 1-petaOPS nominal per-

progress in the computing hardware tasked to execute them. formance but actual 3% efficiency delivers a

ChatGPT-4’s LLM, for example, exceeds 1 trillion parameters, meager 30 teraOPS. Basically, a very costly

posing a challenge for current storage capabilities and performance processor designed to run these algorithms

requirements. Memory storage is already reaching hundreds of remains inactive 97% of the time.

gigabytes. Processing throughput needs multiple petaOPS To compensate for the low efficiency in

(1 petaOPS = 10 operations per second) to deliver query responses processing model training and inference in

15

in an acceptable timeframe, typically less than a couple of seconds. data centers, cloud providers add more hard-

While model training and inference share performance requirements, they differ on four ware to perform the same task. The approach

other characteristics: memory, latency, power consumption and cost (Table 1). escalates the cost and multiplies power

The model training and inference scenario today is carried out on extensive comput- consumption.

ing farms. Each job runs for a long time and consumes a sizable amount of electric power Obviously, such a method is not applicable

that produces copious heat at mind-boggling costs. Nonetheless, the farms deliver what is for inference at the edge.

expected of them.

Training a GPT-4 model on FP32 or FP64 arithmetic may require more than 1 trillion bits ESTIMATED COST ANALYSIS OF GenAI IN

stored on the fastest versions of high-bandwidth–memory (HBM) DRAM. The performance DATA CENTERS PROCESSING GPT-4

necessary to train such a massive model calls for tens of petaOPS running for weeks—an McKinsey estimated that in 2022, Google

annoyance, but not a roadblock. To accomplish the job, computing farms consume megawatts search processed 3.3 trillion queries

with total cost of ownership in the hundreds of billions of dollars. Again, not a perfect sce- (~100,000 queries/second) at a cost of

nario, but a working solution. $US0.002 per query, considered to be the

Via-à-vis model training, model inference, usually performed on FP8 arithmetic, which benchmark. The total annual cost amounted

still produces large amounts of data (in the hundreds of billions of bits), must deliver a query to US$6.6 billion. Google is not charging fees

response with a latency of no more than a couple of seconds to keep the user’s attention and for the search service. Instead, it covers the

acceptance. Furthermore, considering that a vast potential market for inference encompasses cost via advertising revenues—for now.

mobile applications at the edge, a viable solution must provide throughput of more than The same McKinsey analysis stated that the

1 petaOPS with implementation efficiency exceeding 50%. ChatGPT-3 cost per query hovers at around

To ensure mobility, the solution must minimize energy consumption, possibly to less than US$.03 per query, 15× larger than the bench-

50 W/petaOPS, at an acquisition/deployment cost in the ballpark of a few hundred dollars. mark. On an annual basis of

These are lofty specifications for feasible inference scenarios running on edge devices. 100,000 queries/second, the total cost would

The crux of the matter centers on the memory bottleneck (also called the memory wall), exceed US$100 billion.

which increases latency, with a deleterious impact on implementation efficiency, energy con- Let’s evaluate the implication of the

sumption and cost. benchmarks on the cost of ownership of a

data center supporting ChatGPT-4 based on

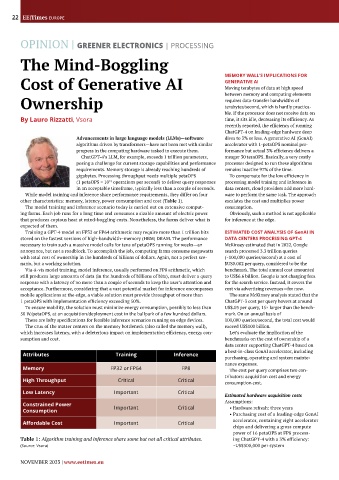

Attributes Training Inference a best-in-class GenAI accelerator, including

purchasing, operating and system mainte-

nance expenses.

Memory FP32 or FP64 FP8 The cost per query comprises two con-

tributors: acquisition cost and energy

High Throughput Critical Critical consumption cost.

Low Latency Important Critical

Estimated hardware acquisition costs

Constrained Power Assumptions:

Consumption Important Critical • Hardware refresh: three years

• Purchasing cost of a leading-edge GenAI

Affordable Cost Important Critical accelerator, containing eight accelerator

chips and delivering a gross compute

power of 16 petaOPS at FP8 process-

Table 1: Algorithm training and inference share some but not all critical attributes. ing ChatGPT-4 with a 3% efficiency:

(Source: Vsora) ~US$500,000 per system
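
The throughput and cost figures quoted in this article can be rechecked with a few lines of arithmetic. The Python sketch below is only a back-of-the-envelope illustration: the function and constant names are hypothetical, the 365-day year is an assumption, and the inputs are the numbers cited above (3% efficiency, 3.3 trillion queries per year, US$0.002 and US$0.03 per query, a 16-petaOPS system).

```python
"""Back-of-the-envelope check of the figures quoted in the article."""

PETA = 10**15  # 1 petaOPS = 10^15 operations per second
TERA = 10**12
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumption: non-leap year


def effective_ops(nominal_ops: float, efficiency: float) -> float:
    """Throughput actually delivered when only a fraction of peak is usable."""
    return nominal_ops * efficiency


def annual_cost(queries_per_year: float, cost_per_query: float) -> float:
    """Total yearly cost in US dollars for a given per-query cost."""
    return queries_per_year * cost_per_query


def queries_per_second(queries_per_year: float) -> float:
    """Average query rate implied by a yearly query count."""
    return queries_per_year / SECONDS_PER_YEAR


if __name__ == "__main__":
    # Inputs taken from the article text.
    efficiency = 0.03
    google_queries = 3.3e12
    benchmark_cost = 0.002  # US$ per query, Google search
    genai_cost = 0.03       # US$ per query, ChatGPT-3 estimate

    single = effective_ops(1 * PETA, efficiency)
    system = effective_ops(16 * PETA, efficiency)
    print(f"1 petaOPS at 3%:   {single / TERA:.1f} teraOPS")
    print(f"16 petaOPS at 3%:  {system / TERA:.1f} teraOPS")
    print(f"Average rate:      {queries_per_second(google_queries):,.0f} queries/s")
    print(f"Benchmark cost:    US${annual_cost(google_queries, benchmark_cost) / 1e9:.1f} billion/year")
    print(f"GenAI cost:        US${annual_cost(google_queries, genai_cost) / 1e9:.1f} billion/year")
    print(f"Cost ratio:        {genai_cost / benchmark_cost:.0f}x")
```

With these inputs, the 16-petaOPS system delivers about 0.48 petaOPS of usable throughput, and the per-query cost of US$0.03 applied to 3.3 trillion queries comes to about US$99 billion per year, in line with the order of magnitude stated in the text.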
